By John Doe February 2, 2026

Summary: In this article, we’ll take a look at the freeze issue that comes with high transaction throughput in PostgreSQL, and corresponding solutions.

Table of Contents

Transaction assignments scenario: Use case

Let’s create a table in the PostgreSQL database and insert a sufficient volume of data into it.

CREATE UNLOGGED TABLE t_large (id) AS

SELECT i FROM generate_series(1, 250000000) AS s(i);

Construct a prepared transaction, which will prevent the background autovacuum process from advancing the oldest transaction ID in the system by freezing data.

BEGIN;

INSERT INTO t_large (id) VALUES (250000001);

PREPARE TRANSACTION 't1';

Note: If you need to use prepared transactions, it is recommended that you set the parameter max_prepared_transactions to a value that is at least as large as max_connections. This ensures that each database session can have one pending prepared transaction.

Manually simulate assigning transaction IDs with pg_resetwal, directly advancing the transaction ID to 2.1 billion.

pg_ctl stop

pg_resetwal --pgdata=$PGDATA --next-transaction-id=2100000000

dd if=/dev/zero of=$PGDATA/pg_xact/07D2 bs=8192 count=24

pg_ctl start

With an EXCEPTION clause in a stored procedure to perform a full-table data update, which rapidly consumes the transaction IDs in the system.

SET log_min_messages = 'ERROR';

SET client_min_messages = 'ERROR';

DO $$ DECLARE

blkid integer := 0;

posid integer := 1;

BEGIN

LOOP

BEGIN

PERFORM 1 FROM t_large WHERE ctid = (blkid, posid)::text::tid FOR UPDATE;

EXCEPTION WHEN OTHERS THEN

RAISE WARNING 'Exception for row: (%, %)', blkid, posid;

END;

IF FOUND THEN

posid := posid + 1;

ELSIF posid = 1 THEN

EXIT;

ELSE

blkid := blkid + 1;

posid := 1;

END IF;

END LOOP;

END$$;

After the above commands have run for a period of time, the database throws an error: “database is not accepting commands”, and PostgreSQL enters an outage state.

ERROR: database is not accepting commands that assign new transaction IDs to avoid wraparound data loss in database "postgres"

HINT: Execute a database-wide VACUUM in that database.

You might also need to commit or roll back old prepared transactions, or drop stale replication slots.

Transaction assignments scenario: How it works

Here’s what happens to the global transaction ID in the database system, when we run the stored procedure in PostgreSQL:

- PostgreSQL uses subtransactions to implement the exception-handling in the stored function.

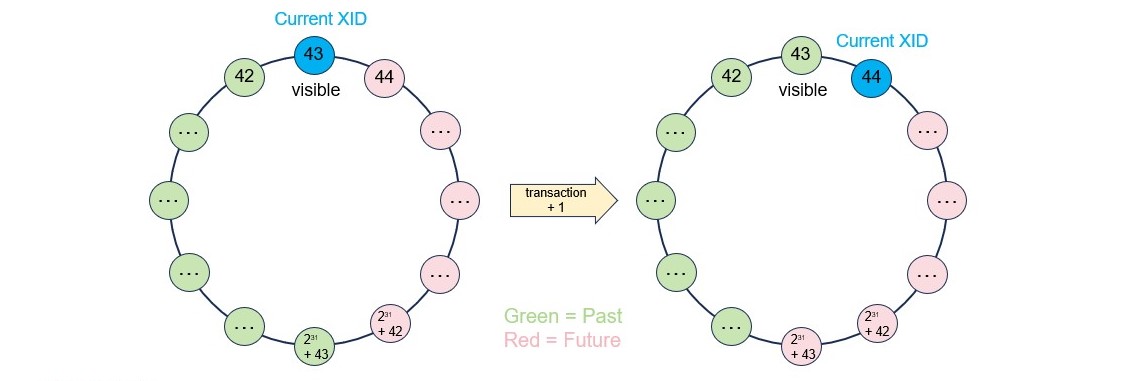

- Each transaction/subtransaction has a transaction ID, which is a bit like the clock time of a database system.

- Each tuple has two system columns, xmin and xmax, which hold the transaction/subtransaction IDs that inserted and deleted them, respectively.

- The maximum number of transaction IDs is around 2 billion, and after beyond that number, the multi-version concurrency control will not work.

Transaction assignments scenario: Thought

Impact of the issue

- The rows in all tables need to be scanned periodically for transaction ID freezing. The interval is controlled by the autovacuum_freeze_max_age parameter, which defaults to 200 million transactions.

- If there are some long-live transactions or slow queries in the system, the transaction ID will be exhausted and the database will shutdown.

- The system needs to store and manage the state of all unfrozen transactions. The wait events for accessing the cache in pg_xact, pg_subtrans and pg_multixact are prone to occur, and the system performance will be worse.

Freeze Frequency vs. TPS

In PostgreSQL, the frequency of freezing tuples (via VACUUM) is directly proportional to the number of transactions per second (TPS) that modify data (INSERT, UPDATE, DELETE). Because PostgreSQL uses 32-bit transaction IDs (XIDs) that wrap around every ~2 billion transactions, high-throughput systems (high write TPS) require more frequent freezing to prevent data loss (wraparound).

The time taken to reach the maximum autovacuum_freeze_max_age decreases as write TPS increases.

| TPS (Writes) | Time to reach 2 billion limit | Time to freeze |

|---|---|---|

| 1,000 | 23 days | 2.3 days |

| 10,000 | 2.3 days | 5.5 hours |

| 100,000 | 5.5 hours | 33 minutes |

| 1,000,000 | 33 minutes | 3.3 minutes |

Note: These figures assume continuous, high-volume write activity and are, in some cases, theoretical to show the impact of extreme transaction throughput.

Solution

- Add a monitoring metric and alarm, to check transaction ID exhaustion.

- Add a monitoring metric and alarm, to check uses of subtransactions.

- Add a monitoring metric and alarm, to check long running transactions.

The solution by Redrock Postgres

Redrock Postgres implemented subtransactions based on the undo record position, these subtransactions are only temporary marks in a transaction. Subtransactions use the same transaction ID as the owning transaction block, they do not need to assign their own transaction IDs, and have no risk to the system.

Besides, in Redrock Postgres, the internal transaction ID is 8 bytes long, and can be used almost infinitely without worrying about running out of transaction IDs. Each transaction will record undo logs, which can be used for rolling back modified data and transactions, making transaction status maintenance and management simpler and more stable.